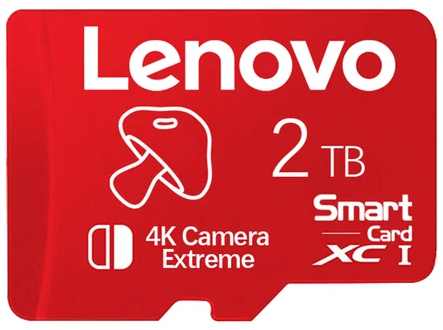

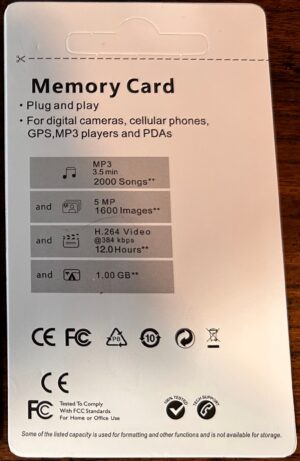

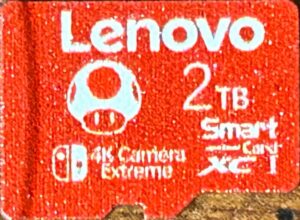

- Obtained from: AliExpress

- Advertised capacity: 2TB

- Logical capacity: 2,147,483,648,000 bytes

- Fake/skimpy flash: Fake flash

- Protected area: 0 bytes

- Speed class markings: None

Discussion

As with the 128GB card above, I wanted to get one card that was obviously fake flash and one that could have been ambiguous, to see whether only one would be fake or whether both would be. It turns out they were both fake. (I later purchased two additional samples after deciding that I should try to have at least three samples of each model that I’m testing.)

Disclaimer: I don’t believe Lenovo had anything to do with this card. I think this is an unlicensed knock-off, which is why “Lenovo” is in quotes.

This card continues the fake flash trend of terrible performance. While it did better on the sequential write and random write tests than its 128GB sibling, it did about the same on the sequential read and random read tests. With the exception of the random read scores for samples #2 and #3, all results were below average, and even those two barely made it above average. Sequential read scores for all three samples were more than one standard deviation below average, with the worst of the three landing in the 14th percentile. As with the 128GB card, the seller only put the UHS-I mark on this card, so there are no speed class marks to evaluate.
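As a rough sanity check on those two figures, the relationship between standard deviations and percentiles can be sketched as below. This assumes the scores are roughly normally distributed, which may not hold for the survey data, and the percentile quoted above may well have been computed empirically rather than from a normal model.

```python
from statistics import NormalDist

# Assumption: scores behave like a standard normal distribution once
# normalised. This is for illustration only, not the survey's method.
standard_normal = NormalDist(mu=0.0, sigma=1.0)

# Share of a normal population scoring below "one standard deviation
# below average", expressed as a percentile.
percentile_at_minus_one_sd = standard_normal.cdf(-1.0) * 100

# z-score that corresponds to the 14th percentile under the same assumption.
z_at_14th_percentile = standard_normal.inv_cdf(0.14)

print(f"-1 SD sits at roughly the {percentile_at_minus_one_sd:.1f}th percentile")
print(f"The 14th percentile is roughly {z_at_14th_percentile:.2f} SD from the mean")
```

Under that assumption, one standard deviation below average corresponds to roughly the 16th percentile, so a 14th-percentile score is consistent with being slightly more than one standard deviation below average.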

On the endurance front, this model fared far better than its 128GB sibling: sample #1 completed 5,601 read/write cycles before showing any errors; sample #3 completed 8,311 read/write cycles before showing any errors; and sample #2 did not show its first error until round 10,583.

Sample #1 completed 5,601 read/write cycles before showing any errors. Curiously, when data mismatch errors occurred, they mostly appeared as a single group of 64 contiguous sectors, with the data read bearing no apparent resemblance to the data written. These episodes were separated by stretches of read/write cycles in which no data mismatch errors occurred. The number of intervening rounds seemed to be random, ranging from as few as 5 to as many as 625. This implies to me that the card was employing some sort of wear leveling technique (probably dynamic wear leveling), that it wasn’t detecting the bad sectors, and that it was simply recycling those portions of the card as part of its wear leveling algorithm.
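To illustrate how a pattern like "one group of 64 contiguous sectors" can be spotted in a round's results, here is a minimal sketch that groups mismatched sector numbers into contiguous runs. The function name `contiguous_runs` and the sector numbers are hypothetical; this is not the test program's actual code.

```python
from typing import Iterable, List, Tuple


def contiguous_runs(bad_sectors: Iterable[int]) -> List[Tuple[int, int]]:
    """Group sector numbers into (first_sector, length) runs of adjacent sectors."""
    runs: List[Tuple[int, int]] = []
    start = None
    previous = None
    for sector in sorted(set(bad_sectors)):
        if previous is not None and sector == previous + 1:
            # Still inside the current run; extend it.
            previous = sector
            continue
        if start is not None:
            # The current run ended at `previous`; record it.
            runs.append((start, previous - start + 1))
        start = previous = sector
    if start is not None:
        runs.append((start, previous - start + 1))
    return runs


# Made-up sector numbers, for illustration only.
example = list(range(4096, 4096 + 64)) + [9000, 9001]
print(contiguous_runs(example))  # [(4096, 64), (9000, 2)]
```

A round whose mismatches collapse to a single run of length 64 would match the behavior described above.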

During round 11,626, the card started experiencing a number of missing data errors; however, the number of sectors affected during this round, as well as over the next five rounds, represented only a fraction of a percent of the total sectors on the device. This escalated during round 11,632, when the proportion of bad sectors on the device went from 0.126% to 3.52%. By the end of round 11,633, that number had shot up to 43.089%. Then, sometime during round 11,634, the card stopped responding to commands altogether.
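The bookkeeping behind a figure like "43.089% of sectors bad" can be sketched as follows. This assumes that a sector counts as bad from the first round in which it fails, and that the threshold is treated as crossed once the cumulative share reaches 50%. The function name, the tiny 1,000-sector device, and the per-round data are all made up for illustration.

```python
from typing import Iterable, Optional, Set, Tuple


def first_round_over_threshold(
    rounds: Iterable[Tuple[int, Set[int]]],
    total_sectors: int,
    threshold: float = 0.5,
) -> Optional[int]:
    """Return the first round at which the cumulative share of bad sectors
    reaches `threshold`, or None if it never does."""
    bad: Set[int] = set()
    for round_number, newly_bad in rounds:
        bad |= newly_bad  # a sector that fails again is only counted once
        if len(bad) / total_sectors >= threshold:
            return round_number
    return None


# Hypothetical 1,000-sector device, for illustration only.
history = [
    (1, set(range(0, 10))),     # 1% bad
    (2, set(range(5, 430))),    # 43% bad in total
    (3, set(range(430, 520))),  # 52% bad in total
]
print(first_round_over_threshold(history, total_sectors=1000))      # 3
print(first_round_over_threshold(history[:2], total_sectors=1000))  # None
```

The second call mirrors a card that stops responding before it reaches the threshold: the function returns `None` because the cumulative share never gets to 50%.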

Since this card made it so close to the 50% failure threshold, I’ll go ahead and post a chart showing its progression:

Sample #2’s first error was a series of bit flips, affecting two contiguous sectors, during round 10,583. It has survived 12,558 read/write cycles in total so far.

Sample #3’s first error was a series of bit flips, affecting two contiguous sectors, during round 8,311. It managed to survive a total of 11,757 read/write cycles before it crossed the 50% failure threshold. Here’s the graph of this card’s progression:

November 24, 2024 (current number of read/write cycles is updated automatically every hour)